😄 I have been a Ph.D. student at the University of Hong Kong since Jan. 2023, supervised by Taku Komura. I obtained my M.Sc. degree from Saarland University and the Max Planck Institute for Informatics (supervisor: Marc Habermann), and my B.Sc. degree from Shanghai Jiao Tong University.

I have had several wonderful research internships at companies and institutes, namely Adobe Research (2021, mentor: Yang Zhou), miHoYo (2020, mentor: Jun Xing), and MPI-INF (2019, supervisor: Gerard Pons-Moll).

My research interests lie at the intersection of computer vision and computer graphics. More specifically, I am interested in data-driven modeling and animation of digital characters, including both the body and the clothing. My research goal is to develop algorithms that free content creators from laborious manual work.

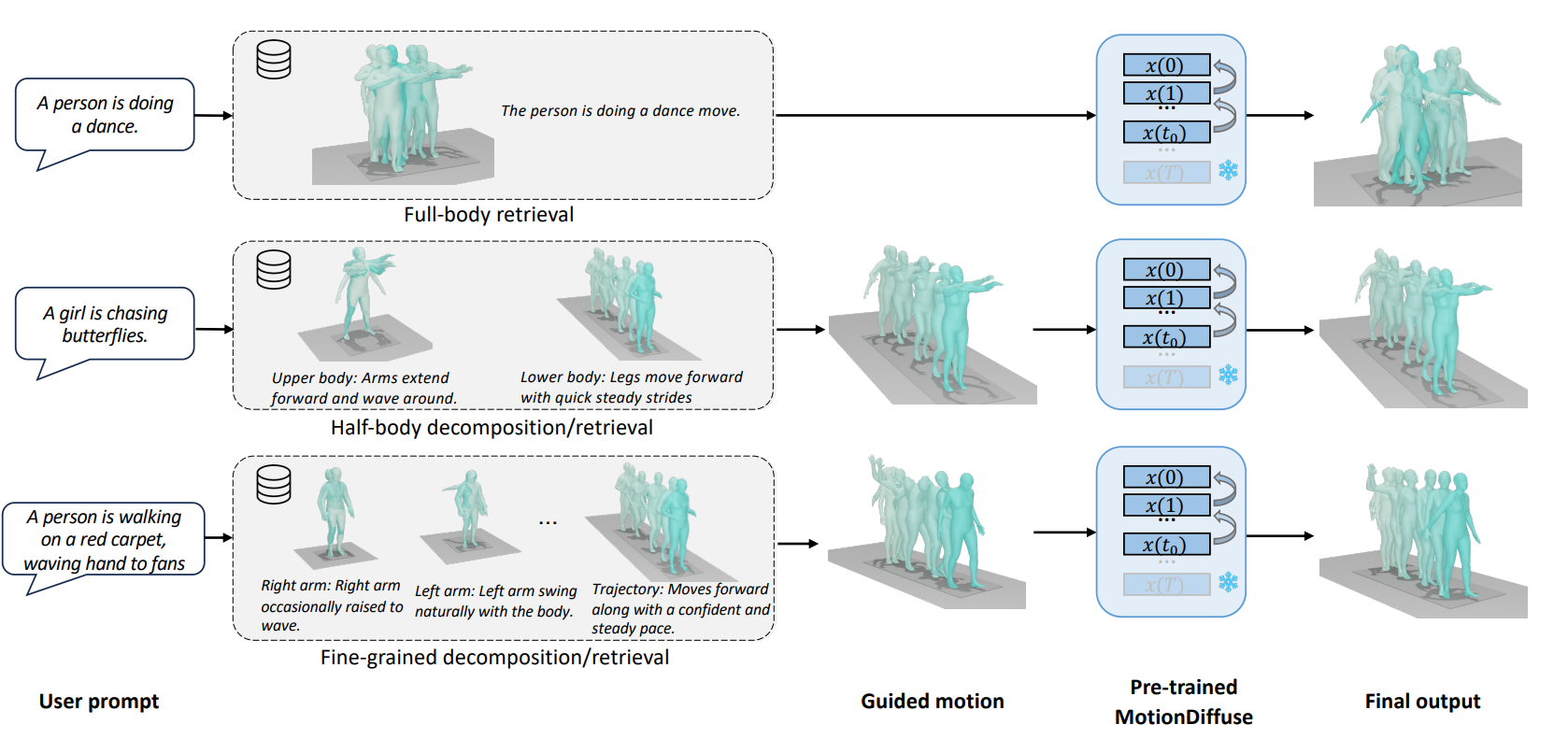

Zhouyingcheng Liao, Mingyuan Zhang, Wenjia Wang, Lei Yang, Taku Komura

arXiv 2024

[paper]

A training-free retrieval-augmented system to generate 3D motion from text.

Mingyi Shi, Dafei Qin, Leo Ho, Zhouyingcheng Liao, Yinghao Huang, Junichi Yamagishi, Taku Komura

arXiv 2024

[paper]

An online system for generating dynamic, interactive motions for two characters from speech audio.

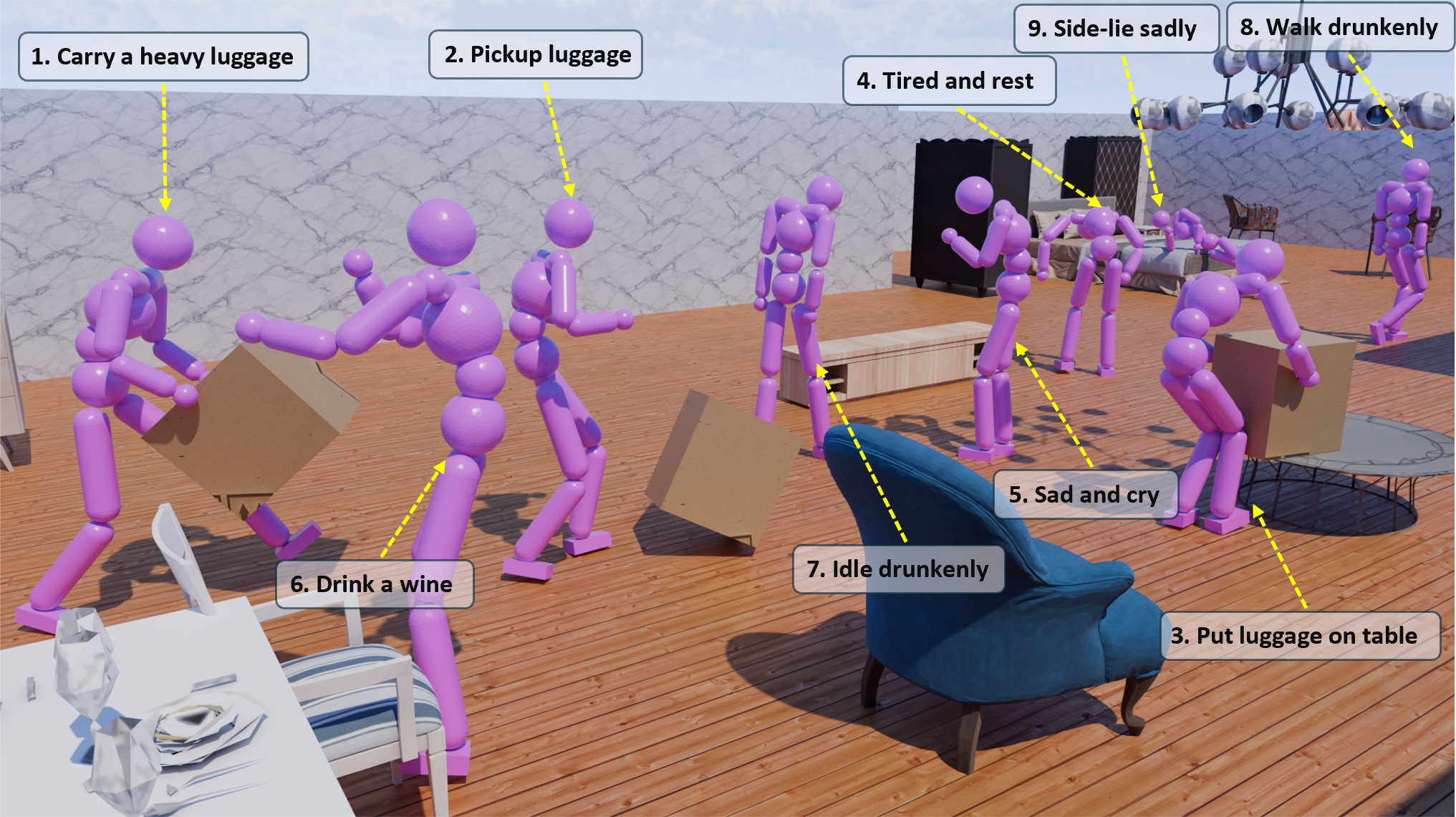

Wenjia Wang, Liang Pan, Zhiyang Dou, Jidong Mei, Zhouyingcheng Liao, Yuke Lou, Yifan Wu, Lei Yang, Jingbo Wang, Taku Komura

arXiv 2024

[paper]

A novel framework that combines script-driven intent, obtained via retrieval-augmented generation, with a versatile physics-based control policy to generate human-scene interactions.

Wenyang Zhou, Zhiyang Dou, Zeyu Cao, Zhouyingcheng Liao, Jingbo Wang, Wenjia Wang, Yuan Liu, Taku Komura, Wenping Wang, Lingjie Liu

ECCV 2024

A fast, high-quality human motion generation method that takes only 0.05 s to generate a 196-frame sequence.

Zhouyingcheng Liao, Vladislav Golyanik, Marc Habermann, Christian Theobalt

CVPR 2024

[paper]

The first end-to-end method for generating a dense and rigged 3D character mesh with learned pose-dependent skinning weights solely from multi-view videos.

Zhouyingcheng Liao, Jimei Yang, Jun Saito, Gerard Pons-Moll, Yang Zhou

ECCV 2022

The first neural method that achieves automatic pose transfer between arbitrary stylized 3D characters, without requiring rigging, skinning, or manual correspondence.

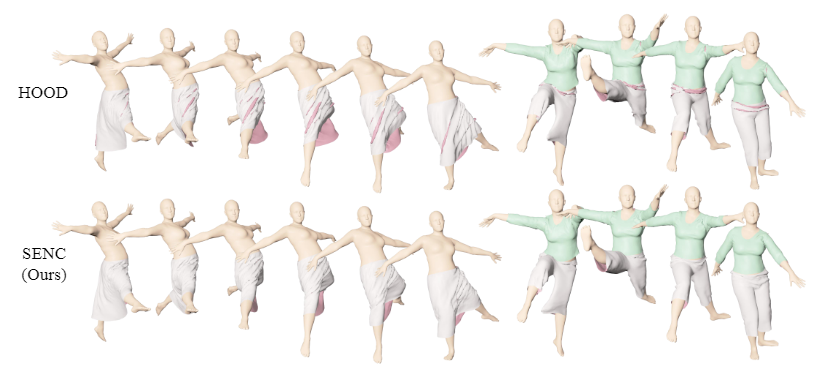

Chaitanya Patel*, Zhouyingcheng Liao*, Gerard Pons-Moll (*: co-first author)

CVPR 2020 (oral)

[webpage] [paper] [video] [code] [data]

TailorNet is the first neural model that predicts 3D clothing deformation as a function of three factors: pose, shape, and style (garment geometry), while retaining wrinkle detail. We also present a garment animation dataset of 55,800 frames generated by physically based simulation.

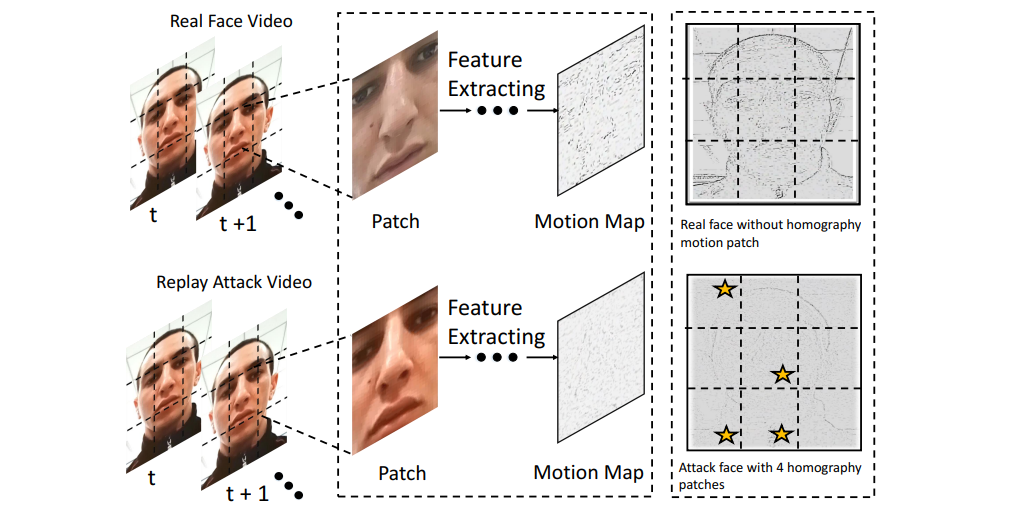

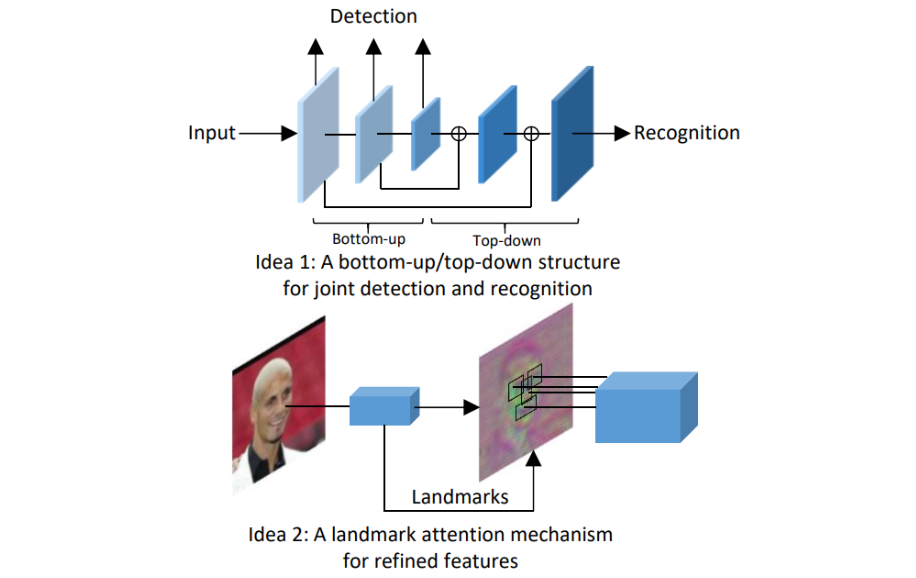

Chen Lin, Zhouyingcheng Liao, Peng Zhou, Jianguo Hu, Bingbing Ni

IJCAI 2018

[paper]

Zhouyingcheng Liao, Peng Zhou, Qinlong Wu, Bingbing Ni

ICPR 2018

[paper]

SIGGRAPH Asia 2023, 2024

Eurographics 2024

CVPR 2023, 2024

TVCG

Computers & Graphics

Data-driven Computer Animation (COMP3360/7508@HKU) Spring 2024

Computer Game Design and Programming (COMP3329@HKU) Spring 2024

Computer Game Design and Programming (COMP3329@HKU) Spring 2023